Nvidia at CES 2026: Showcasing AI for autonomous driving and next-generation chip designs

At CES 2026 in Las Vegas, Nvidia kicked off the event with a series of major announcements, unveiling new AI hardware platforms, open AI models, and expanded initiatives in autonomous driving, robotics, and personal computing. CEO Jensen Huang confirmed that the company’s next-generation Rubin AI platform is now in production and outlined plans to scale AI across consumer devices, vehicles, and industrial systems throughout the coming year.

One of the most significant reveals was Rubin, Nvidia’s next-generation AI computing platform and the successor to its Blackwell architecture. Rubin is Nvidia’s first “extreme co-designed” platform, meaning that its chips, networking, and software are developed together as a single system rather than separately. The platform, now in full production, is designed to dramatically reduce the cost of generating AI outputs compared to previous systems. By combining GPUs, CPUs, networking, and data-processing hardware, Rubin can efficiently handle large AI models and complex workloads.

Nvidia also introduced a new AI-focused storage system aimed at improving the performance of large language models. It allows them to manage long conversations and extensive context windows more efficiently, which enables faster responses while using less power.

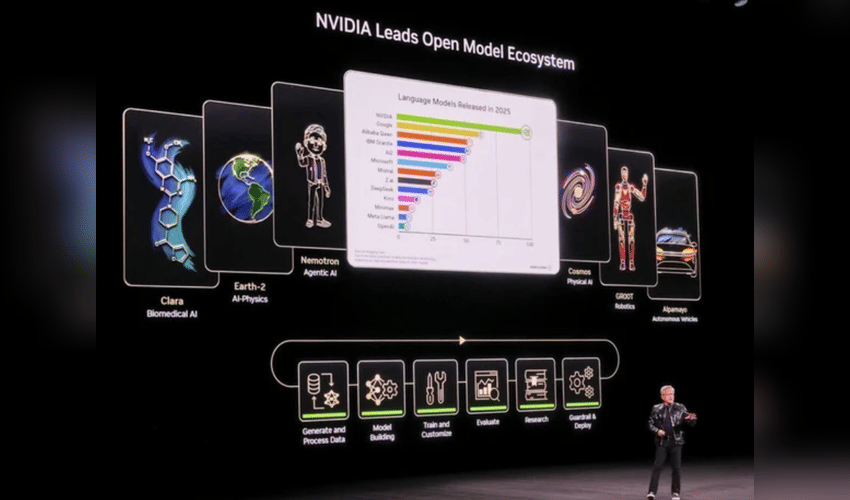

Nvidia also showcased its growing portfolio of open AI models, trained on its supercomputers and available for developers and organizations to build upon. These models are organized by application, spanning healthcare, climate research, robotics, reasoning-based AI, and autonomous driving, and provide ready-to-use foundations that can be customized and deployed without starting from scratch. The goal is to bring AI features to apps, vehicles, and devices sooner.

A major focus of Nvidia’s presentation was what it calls physical AI, where AI systems interact with the real world through robots, machines, and vehicles. Nvidia demonstrated how robots and machines are trained in simulated environments before deployment in real-world scenarios. These simulations make it possible to test edge cases, safety protocols, and complex movements that would be difficult or unsafe to recreate physically. At the heart of this effort is Nvidia’s new Cosmos foundation model, trained on videos, robotics data, and simulations. The model can generate realistic videos from a single image, synthesize multi-camera driving scenarios, model edge-case environments from prompts, perform physical reasoning and trajectory prediction, and drive interactive, closed-loop simulations.

Nvidia also introduced Alpamayo, a new AI model portfolio designed specifically for autonomous driving. It includes Alpamayo R1, the first open reasoning VLA (vision language action) model for autonomous vehicles, and AlpaSim, a fully open simulation blueprint for high-fidelity autonomous vehicle testing. The models process camera and sensor data, reason about driving scenarios, and determine appropriate vehicle actions, allowing autonomous vehicles to handle complex situations they have not encountered before, such as navigating busy intersections. Nvidia confirmed that Alpamayo will be integrated into its existing autonomous vehicle software stack, with the first implementation set to appear in the upcoming Mercedes-Benz CLA.